Why use Utility AI instead of traditional AI techniques like BTs, FSMs and GOAP?

After years of working with traditional condition-based AI techniques, such as Behavior Trees (BTs), Finite State Machines (FSMs), and Goal-Oriented Action Planning (GOAP), I found that they had many drawbacks. I spent a lot of time trying to work around their limitations but never found a good solution. Then I discovered Utility AI and realized it could overcome many of the limitations of these techniques. That’s what drove me to create Utility Intelligence.

Below are some disadvantages of these techniques that I encountered in the past and how Utility AI addresses them.

Temporal coupling between decisions

Info

In traditional condition-based AI techniques like BTs and FSMs, agents make decisions based on conditions and the order of decisions. However, both the conditions and their order are subject to change as the game design evolves.

Note

Although GOAP is condition-based, it does not suffer from temporal coupling between decisions the way BTs and FSMs do. This is because, in addition to conditions, GOAP selects actions based on their total cost rather than on the order of decisions.

In BTs and FSMs, an agent makes decisions by answering one yes-no question at a time, in a fixed order:

- Should I move towards the enemy?

- Should I attack the enemy?

- Should I flee from the enemy?

if (ShouldMoveTowardsEnemy())
    MoveToEnemy();
else if (ShouldAttackEnemy())
    AttackEnemy();
else if (ShouldFleeFromEnemy())
    FleeFromEnemy();
else
    Idle();

bool ShouldMoveTowardsEnemy()
{
    return DistanceToEnemy > 10 && MyHealth > 50;
}

bool ShouldAttackEnemy()
{
    return DistanceToEnemy < 10;
}

bool ShouldFleeFromEnemy()
{
    return DistanceToEnemy < 20 && MyHealth < 20;
}

For each decision, we must define the conditions that determine whether the agent makes it. If we want to prioritize one decision over another, we have to change the order of these decisions. In the example above, if DistanceToEnemy = 5 and MyHealth = 10, the agent will decide to attack the enemy rather than flee, because ShouldAttackEnemy() is checked before ShouldFleeFromEnemy() and already returns true. But if we swap these two decisions, the agent will choose to flee from the enemy:

if (ShouldFleeFromEnemy())
    FleeFromEnemy();
else if (ShouldAttackEnemy())
    AttackEnemy();

bool ShouldAttackEnemy()
{
    return DistanceToEnemy < 10;
}

bool ShouldFleeFromEnemy()
{
    return DistanceToEnemy < 20 && MyHealth < 20;
}

For simple AIs, this works fine. However, as your AIs become more complex, they will have more decisions, and their decision-making conditions will depend on many factors, such as health, energy, distance to enemy, distance to cover, attack cooldown, etc.

Therefore, if your game design changes, it will be very time-consuming to apply these changes to your game because they might cause significant changes to the structure of BTs and FSMs, and you will have to redesign them from scratch by adding, removing, and reordering the conditions and decisions.

Additionally, testers will have to recheck every behavior of these AIs from the beginning to ensure that they behave as intended. As a result, the cost of syncing your AIs with the game design will increase over time. Eventually, you may lose the ability to change your AIs because the cost of making changes becomes too high.

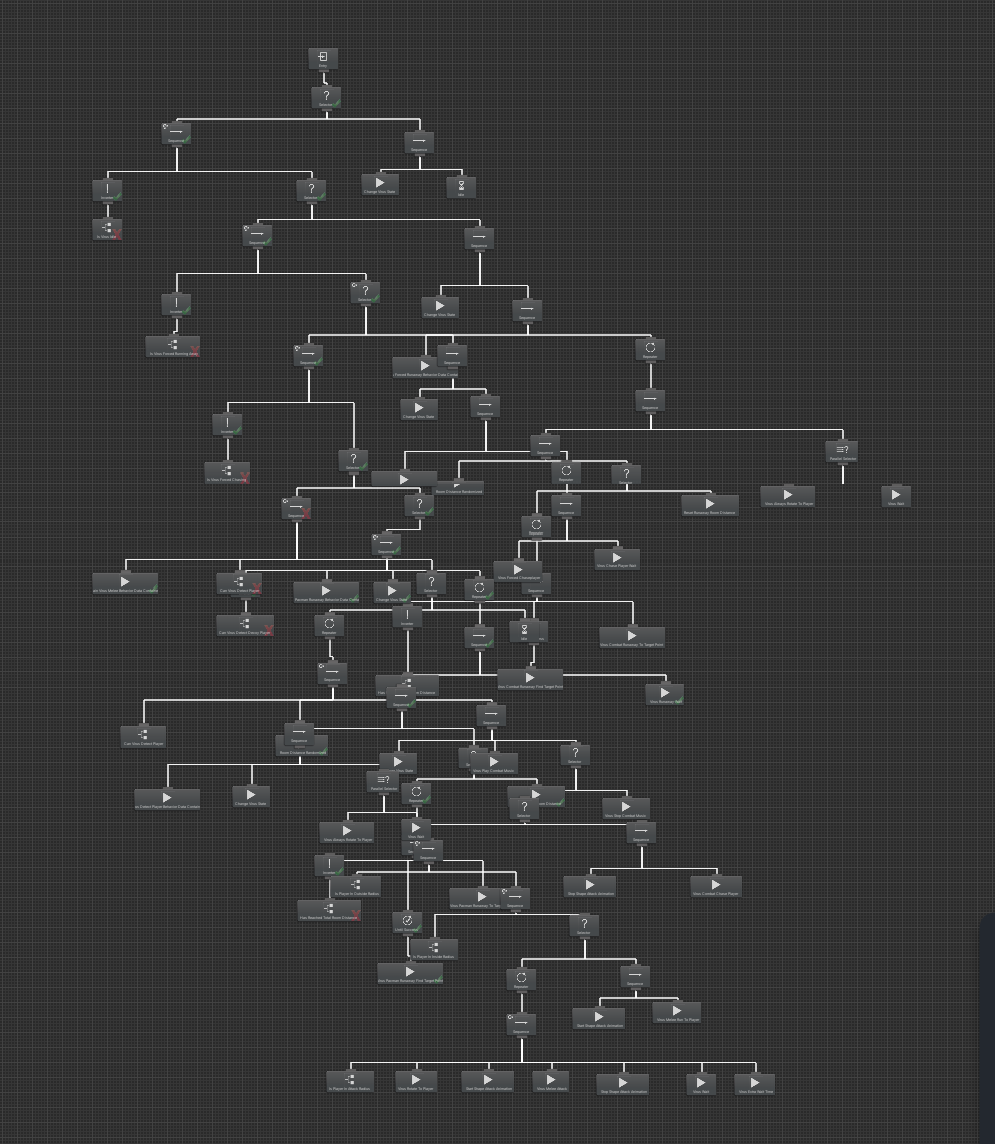

This is the behavior tree of an agent in one of my previous games:

As you can see, it’s quite complex, isn’t it? I still remember that when it became complex like this, it took a lot of my time to apply changes whenever designers altered the game design. I had to redesign my behavior trees again and again. It was a nightmare, and that’s one of the main reasons I created this plugin.

How Utility AI addresses this

Info

Utility AI makes decisions based on scores, not on conditions and the order of decisions like BTs and FSMs. Therefore, there is no coupling between decisions, and they are independent of each other.

Unlike BTs and FSMs, the question a utility-based agent needs to answer is: What do I want to do the most right now? So for each decision, the agent asks itself: How much do I want to take this decision at the moment? Depending on the answers, it assigns a score to each decision, compares all the decisions to each other, and selects the one with the highest score.

As a result, the order of decisions no longer matters, and you don’t need to worry about conditions and their order anymore. What matters is simply: What is the most important thing to do at the moment? For example, if the agent’s health is 30, its energy is 50, and the distance to the enemy is 40, what does the agent want to do the most?

- Move towards the enemy?

- Flee from the enemy?

- Attack the enemy?
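To make the scoring idea concrete, here is a minimal C# sketch, assuming made-up scoring curves: each decision has its own scorer that returns a value between 0 and 1, and the agent simply picks the highest score. `AgentState`, `UtilityBrain`, and the curves themselves are hypothetical illustrations, not Utility Intelligence’s API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical agent state; the fields mirror the example above.
public readonly struct AgentState
{
    public readonly float Health;
    public readonly float Energy;
    public readonly float DistanceToEnemy;

    public AgentState(float health, float energy, float distanceToEnemy)
    {
        Health = health;
        Energy = energy;
        DistanceToEnemy = distanceToEnemy;
    }
}

public static class UtilityBrain
{
    static float Clamp01(float x) => Math.Max(0f, Math.Min(1f, x));

    // Hypothetical scorers: each answers "How much do I want to take this decision right now?" in [0, 1].
    static readonly Dictionary<string, Func<AgentState, float>> Scorers =
        new Dictionary<string, Func<AgentState, float>>
        {
            ["MoveTowardsEnemy"] = s => Clamp01((s.Health + s.Energy) / 200f) * Clamp01(s.DistanceToEnemy / 20f),
            ["AttackEnemy"] = s => Clamp01(s.Health / 100f) * Clamp01(1f - s.DistanceToEnemy / 10f),
            ["FleeFromEnemy"] = s => Clamp01(1f - s.Health / 100f) * Clamp01(1f - s.DistanceToEnemy / 50f),
        };

    // Every decision is scored independently, so the order of the entries above does not matter.
    public static Dictionary<string, float> ScoreAll(AgentState s) =>
        Scorers.ToDictionary(kv => kv.Key, kv => kv.Value(s));

    // Pick the decision with the highest score.
    public static string ChooseBest(AgentState s) =>
        ScoreAll(s).OrderByDescending(kv => kv.Value).First().Key;
}
```

With health 30, energy 50, and distance 40, these invented curves give MoveTowardsEnemy about 0.4, FleeFromEnemy about 0.14, and AttackEnemy 0, so the agent moves towards the enemy. For the earlier case (DistanceToEnemy = 5, MyHealth = 10, with energy assumed to be 50), FleeFromEnemy wins with about 0.81, no matter where it sits in the dictionary.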

Decisions are not made based on targets

A major drawback of traditional condition-based AI techniques like BTs, FSMs, and GOAP is that agents do not make decisions based on targets. They select the target for the current decision after the decision is made. This means their decision-making does not take targets into account. As a result, the decision-target pair selected may not be the best one.

For example, suppose we have an agent with 20 health, and there are two items nearby:

- An apple, 5m away, that restores 5 health.

- An orange, 7m away, that restores 20 health.

Which item should it go to and eat? What conditions should we add to the action to ensure the best item is selected?

Moreover, what happens if there is an enemy with 1 health only 5m away from the agent? Obviously, the agent should go and kill that enemy instead of seeking a healing item to eat.

If we use condition-based techniques, will the agent choose to kill the nearby enemy or seek a healing item to eat? What conditions do we need to add to these decisions to ensure the best decision-target pair is selected?

How Utility AI addresses this¶

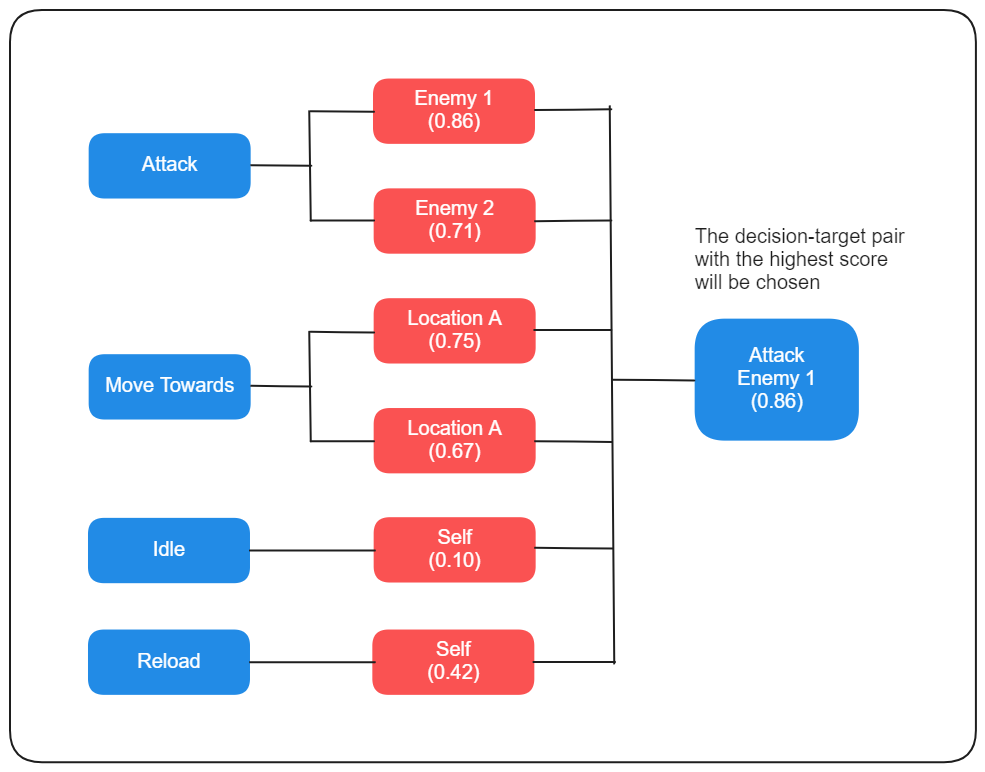

Unlike traditional techniques, Utility AI evaluate all possible decision-target pairs and select the one with the highest score to execute.

This approach ensures that the chosen decision-target pair is always the best one. That’s why utility-based agents behave far more natural than those created using traditional condition-based AI techniques.
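A minimal sketch of evaluating decision-target pairs for the apple, orange, and enemy example above. It assumes two decisions (`EatItem` and `AttackEnemy`) and invented scoring formulas; `Target` and `PairEvaluator` are hypothetical names used only for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical target description; a target is either a healing item or an enemy.
public sealed class Target
{
    public string Name { get; }
    public float Distance { get; }
    public float HealAmount { get; }  // > 0 for healing items
    public float EnemyHealth { get; } // > 0 for enemies

    public Target(string name, float distance, float healAmount = 0f, float enemyHealth = 0f)
    {
        Name = name;
        Distance = distance;
        HealAmount = healAmount;
        EnemyHealth = enemyHealth;
    }
}

public static class PairEvaluator
{
    static float Clamp01(float x) => Math.Max(0f, Math.Min(1f, x));

    // Closer targets score higher; 20 m is an assumed "too far to care" distance.
    static float Proximity(Target t) => Clamp01(1f - t.Distance / 20f);

    // Hypothetical scorers; each returns 0 when the decision does not apply to the target.
    static float ScoreEat(float myHealth, Target t) =>
        t.HealAmount <= 0f ? 0f
            : Clamp01(1f - myHealth / 100f) * Clamp01(t.HealAmount / 20f) * Proximity(t);

    static float ScoreAttack(Target t) =>
        t.EnemyHealth <= 0f ? 0f : Clamp01(1f - t.EnemyHealth / 100f) * Proximity(t);

    // Score every (decision, target) pair and return the one with the highest score.
    public static (string Decision, string TargetName, float Score) Best(float myHealth, IEnumerable<Target> targets)
    {
        var pairs = new List<(string Decision, string TargetName, float Score)>();
        foreach (var t in targets)
        {
            pairs.Add(("EatItem", t.Name, ScoreEat(myHealth, t)));
            pairs.Add(("AttackEnemy", t.Name, ScoreAttack(t)));
        }
        return pairs.OrderByDescending(p => p.Score).First();
    }
}
```

With the agent at 20 health, eating the apple scores about 0.15, eating the orange about 0.52, and attacking the 1-health enemy about 0.74, so the agent attacks. Without the enemy, the orange wins. Neither outcome needs a hand-written condition; it falls out of the scores.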

Any target that meets the conditions can be selected for the chosen decision

One more drawback of traditional condition-based AI techniques is that any target that meets the conditions can be selected for the chosen decision.

For example, if we set up the attack logic of our agent as follows:

if (HasEnemiesInAttackRange()) // decision-making conditions
{
    foreach (var enemy in enemies)
    {
        if (IsEnemyHealthLow(enemy)) // target-selection conditions
        {
            AttackEnemy(enemy);
            break; // the first enemy that meets the conditions is attacked
        }
    }
}
else
{
    Idle();
}

bool IsEnemyHealthLow(Enemy enemy)
{
    return enemy.Health <= 20;
}

Suppose that the attack range is 10m. What if the agent detects multiple enemies with Health <= 20 within 10m? Assume that there are 3 enemies that meet the conditions:

- Enemy 1: 10m away and Health = 20.

- Enemy 2: 4m away and Health = 10.

- Enemy 3: 8m away and Health = 5.

Which enemy would the agent attack? It will attack Enemy 1, because it is the first one that meets the conditions, but it’s not the best choice in this case.

Surely, you can add more conditions to ensure the best enemy is always selected for the attack decision. However, you have to do this manually, and it takes a lot of your time to think about which conditions to add to ensure the best target is selected for every decision the agent makes.

Additionally, what if we add another condition, IsFrozen, to the target-selection logic like this:

if (HasEnemiesInAttackRange()) // decision-making conditions
{
    foreach (var enemy in enemies)
    {
        if (IsEnemyHealthLow(enemy) || IsFrozen(enemy)) // target-selection conditions
        {
            AttackEnemy(enemy);
            break;
        }
    }
}
else
{
    Idle();
}

bool IsEnemyHealthLow(Enemy enemy)
{
    return enemy.Health <= 20;
}

bool IsFrozen(Enemy enemy)
{
    return enemy.IsFrozen;
}

Once again, you will have to struggle with order; however, this time, it’s not the order of decisions but the order of conditions.

Assume that there are 2 enemies like this:

- Enemy 1: Health = 20, IsFrozen = false

- Enemy 2: Health = 40, IsFrozen = true

In this case, both enemies meet the conditions, so the agent attacks whichever one comes first in the list. If that is Enemy 1, the agent attacks it, treating low health and being frozen as equally good reasons. But obviously, this is not the best choice: the agent should attack Enemy 2, since it is frozen and unable to fight back.

To make the agent prefer frozen enemies, you have to give IsFrozen priority over IsEnemyHealthLow explicitly, for example by first searching for a frozen enemy and only then falling back to enemies with low health. However, as I mentioned before, conditions are subject to change, so you will have to reorder them again and again every time the game design changes.

Info

It’s not just BTs and FSMs that have this drawback; GOAP does as well, since GOAP-based agents select the target for an action after the action has been chosen.

How Utility AI addresses this

As mentioned above, for each decision, utility-based agents evaluate all possible targets and select the one with the highest score.

With this approach, you no longer need to worry about whether the selected target for the chosen decision is the best one. You only need to focus on scoring decision-target pairs, and the agent will automatically select the target with the highest score for its chosen decision.
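Applied to the attack example, target scoring might look like the following sketch. The weights (0.4 for low health, 0.3 for proximity, a 0.3 bonus for being frozen) and the `Enemy` and `AttackTargetSelector` types are assumptions chosen for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical enemy data; the fields mirror the examples above.
public sealed class Enemy
{
    public string Name { get; }
    public float Distance { get; }
    public float Health { get; }
    public bool IsFrozen { get; }

    public Enemy(string name, float distance, float health, bool isFrozen)
    {
        Name = name;
        Distance = distance;
        Health = health;
        IsFrozen = isFrozen;
    }
}

public static class AttackTargetSelector
{
    const float AttackRange = 10f; // as in the example above

    static float Clamp01(float x) => Math.Max(0f, Math.Min(1f, x));

    // Hypothetical weights: weaker, closer, and frozen enemies are more attractive.
    public static float Score(Enemy e)
    {
        float lowHealth = 1f - Clamp01(e.Health / 100f);
        float proximity = 1f - Clamp01(e.Distance / AttackRange);
        float frozenBonus = e.IsFrozen ? 0.3f : 0f;
        return 0.4f * lowHealth + 0.3f * proximity + frozenBonus;
    }

    // Every candidate is scored, so the order of the list no longer decides the target.
    public static Enemy SelectTarget(IEnumerable<Enemy> enemies) =>
        enemies.OrderByDescending(e => Score(e)).First();
}
```

For the three enemies above, these weights rank Enemy 2 (about 0.54) ahead of Enemy 3 (about 0.44) and Enemy 1 (about 0.32). In the frozen example, with both enemies assumed to be 5m away, the frozen Enemy 2 wins. Changing the agent’s priorities then means tuning weights rather than reordering conditions.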

Transitions between actions are fixed

Another drawback of traditional AI techniques like BTs and FSMs is that transitions between actions are fixed. This means we can easily predict what BT- and FSM-based agents will do, because the next action can only be one of the predefined transitions.

stateDiagram
    [*] --> Idle
    Idle --> Patrolling: No Enemy Detected
    Patrolling --> Chasing: Enemy Detected
    Chasing --> Attack: Enemy In Range
    Attack --> Chasing: Enemy Out of Range
    Chasing --> Idle: Enemy Lost

How Utility AI addresses this

Utility AI evaluates all decisions and chooses the one with the highest score to execute. The evaluation process considers many factors, such as the current state of the world (environment, obstacles) and the state of the agents involved (health, mana, side effects, etc.).

Since Utility AI doesn’t have transitions, any decision can be the next one, so utility-based agents are harder to predict while still behaving logically. In my opinion, this makes Utility AI a good choice for creating emergent behaviors.

Decision-making conditions are usually based on a threshold

Another drawback of traditional AI techniques that rely on conditions for decision-making, such as BTs, FSMs, and GOAP, is that the conditions are usually based on a threshold. Consider this decision-making logic of an enemy AI:

if (IsPlayerInAttackRange())
    AttackPlayer();
else
    Idle();

bool IsPlayerInAttackRange()
{
    return DistanceToPlayer < 10;
}

With this decision-making logic, the enemy suddenly attacks the player as soon as the player enters its attack range (10 m). If the player is outside that range, the enemy won’t do anything, even if the player is only 11 m away. So if players know the attack range of each enemy, they can kill any enemy easily without losing a drop of health.

How Utility AI addresses this

In Utility AI, this situation is unlikely to happen unless you design it that way, because Utility AI measures how much the agent wants to take a decision at the moment. A distance of 11 m simply means the desire to attack the player is slightly lower than at 10 m.

For example, if the player is within 10 m, the score of AttackPlayer is 1.0, and if the distance to the player is 11 m, the score of AttackPlayer is 0.9. As long as the score of AttackPlayer is greater than that of Idle, AttackPlayer is still chosen, whether the distance is 10 m or 11 m.
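Here is a minimal sketch of that idea, assuming a linear falloff of 0.1 per metre beyond the 10 m attack range and a constant Idle score of 0.5. Both values and the `AttackDesire` class are hypothetical; real projects usually tune a response curve for each consideration instead of using a straight line.

```csharp
using System;

public static class AttackDesire
{
    const float AttackRange = 10f;      // from the example above
    const float FalloffPerMetre = 0.1f; // assumed: score drops by 0.1 per metre beyond the range

    // Hypothetical constant score for idling; any decision that scores higher wins.
    public const float IdleScore = 0.5f;

    // A smooth response curve instead of the hard "DistanceToPlayer < 10" threshold:
    // 1.0 inside the range, 0.9 at 11 m, ..., 0 at 20 m and beyond.
    public static float AttackScore(float distanceToPlayer)
    {
        float score = 1f - Math.Max(0f, distanceToPlayer - AttackRange) * FalloffPerMetre;
        return Math.Max(0f, Math.Min(1f, score));
    }

    public static string Choose(float distanceToPlayer) =>
        AttackScore(distanceToPlayer) > IdleScore ? "AttackPlayer" : "Idle";
}
```

With these numbers, the enemy still attacks at 11 m and only gives up once the player is far enough away that attacking scores below idling (for example at 18 m, where the score is 0.2).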

This is also one of the reasons why utility-based agents behave more naturally compared to those using other AI techniques.

Decision-making and decision execution are part of the same process

Decision-making is forced to run at the same frequency as decision execution

A major drawback of traditional AI techniques like BTs and FSMs is that decision-making and decision execution are part of the same process, which means decision-making is forced to run at the same frequency as decision execution.

As a result, even though an agent already has the best decision for the current situation, it still has to ask itself "What decision should I make?" every time it executes that decision.

And if agents need to execute decisions every frame, they will have to make decisions every frame. Why do they have to make decisions when they already have the best one? It’s a waste of resources.

Imagine you’ve decided to go running, but while you’re already running, you have to keep asking yourself: Should I go running? How would you feel about that? It would be a nightmare, right?

How Utility AI addresses this

Utility AI is essentially a decision-making technique and is not involved in decision execution. Therefore, we can easily turn decision-making and decision execution into two separate processes and run each process at a different frequency.

For example, we can run the decision execution process every frame while running the decision-making process only every 0.1s or 0.5s, adjusting the decision-making interval to suit your game’s needs.
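A minimal sketch of running the two processes at different frequencies, assuming a per-frame `Tick(deltaTime)` call (for example from a game loop or Unity’s `Update()`). The `Agent` class and its members are hypothetical, and the decision-making function is passed in as a plain delegate.

```csharp
using System;

// Hypothetical agent: decision execution runs every frame,
// decision-making runs only once every `decisionInterval` seconds.
public sealed class Agent
{
    readonly float decisionInterval;
    readonly Func<string> decide; // e.g. a utility-based scorer
    float timeSinceLastDecision;

    public string CurrentDecision { get; private set; } = "Idle";
    public int DecisionsMade { get; private set; }
    public int ExecutionsRun { get; private set; }

    public Agent(float decisionInterval, Func<string> decide)
    {
        this.decisionInterval = decisionInterval;
        this.decide = decide;
        timeSinceLastDecision = decisionInterval; // make a decision on the first tick
    }

    // Call once per frame with the frame's delta time.
    public void Tick(float deltaTime)
    {
        timeSinceLastDecision += deltaTime;
        if (timeSinceLastDecision >= decisionInterval)
        {
            timeSinceLastDecision = 0f;
            CurrentDecision = decide(); // decision-making
            DecisionsMade++;
        }
        Execute(CurrentDecision);       // decision execution
    }

    // Placeholder for the actual behavior (moving, attacking, ...).
    void Execute(string decision) => ExecutionsRun++;
}
```

With a 0.1s interval and 30 ms frames, this sketch makes 15 decisions over 60 frames while executing the current decision on all 60. Resetting the timer to zero rather than subtracting the interval keeps it simple at the cost of a little drift.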

Moreover, you can even distribute the decision-making workload across multiple frames or threads, or run the decision-making process manually only when necessary. This can significantly improve your game’s performance.
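Distributing the decision-making workload across frames can be sketched as a round-robin scheduler that lets only a fixed number of agents make decisions each frame. `DecisionScheduler` and `budgetPerFrame` are hypothetical names; this is a generic time-slicing technique, not necessarily how Utility Intelligence implements it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical scheduler: each frame, at most `budgetPerFrame` agents run decision-making,
// cycling round-robin so every agent gets a turn over several frames.
public sealed class DecisionScheduler
{
    readonly List<Action> decideCallbacks = new List<Action>();
    readonly int budgetPerFrame;
    int next;

    public DecisionScheduler(int budgetPerFrame) => this.budgetPerFrame = budgetPerFrame;

    public void Register(Action decide) => decideCallbacks.Add(decide);

    // Call once per frame.
    public void Tick()
    {
        int count = Math.Min(budgetPerFrame, decideCallbacks.Count);
        for (int i = 0; i < count; i++)
        {
            decideCallbacks[next]();
            next = (next + 1) % decideCallbacks.Count;
        }
    }
}
```

With 10 agents and a budget of 3, each agent makes a decision roughly every 3 to 4 frames, and the per-frame cost stays bounded no matter how many agents are registered.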

Note

Although these are possible, Utility Intelligence currently only supports distributing the decision-making workload across multiple frames. However, we plan to support all of these in the future.

This is difficult to achieve if you use other AI techniques since, by nature, decision-making is closely tied to decision execution in these systems, making it hard to separate.

Essentially, BTs and FSMs are made up of a series of if/else statements that run sequentially:

if (ShouldMoveTowardsEnemy())
    MoveToEnemy();
else if (ShouldAttackEnemy())
    AttackEnemy();
else if (ShouldFleeFromEnemy())
    FleeFromEnemy();
else
    Idle();

How can we separate decision-making from decision execution in the code above?

Hard to debug

If you use traditional AI techniques as your AI solution, you might find it hard to debug why your agents make the wrong decisions as complexity increases.

Figuring out why a specific decision was not selected as expected requires understanding all the conditions and parameters involved in the decision-making process, such as health, energy, distance to target, armor, damage, etc. And it becomes harder and harder to debug as more parameters are involved.

Sure, you can easily identify the issue within seconds when only a few parameters and conditions are involved in the decision-making process. But what if there are dozens or even hundreds of parameters and conditions?

Imagine an agent has to consider 100 different parameters when making a decision, for example:

- My health = 70

- My energy = 50

- My armor = 20

- The nearest enemy’s health = 30

- The nearest enemy’s energy = 70

- The nearest enemy’s armor = 50

- Danger = 0.7

- Slash damage = 20

- Slash force = 10

- Slash cooldown = 5

- Shoot damage = 5

- Shoot cooldown = 1

- Distance to the nearest enemy = 20

- Distance to the nearest cover = 10

- Distance to the nearest health station = 30

- …

It also has to evaluate 100 different conditions, such as:

- Am I in danger?

- Am I being attacked?

- Am I being healed?

- Is my health low or high?

- Is my energy low or high?

- Is my ammo low or high?

- Is the nearest enemy’s health low or high?

- Is the nearest enemy’s energy low or high?

- Is the nearest enemy’s ammo low or high?

- Are there any enemies nearby?

- Is there any cover nearby?

- Are there any health stations nearby?

- Are there any enemies within my attack range?

- Are there any allies within my healing range?

- Is my distance to the nearest enemy greater than or less than my distance to the nearest ally?

- Is my distance to the nearest enemy greater than or less than my distance to the nearest cover?

- Is my distance to the nearest enemy greater than or less than my distance to the nearest health station?

- ....

Finally, there are 100 possible decisions it can choose from, including:

- Flee from the enemy

- Slash enemy

- Shoot enemy

- Reload ammo

- Use special ability

- Defend

- Move to the nearest enemy

- Move to the nearest cover

- Move to the nearest health station

- Heal allies

- Heal myself

- …

Can you quickly figure out which decision is the best one in just a few seconds or minutes? If you can, congratulations, you’re among the top 1% of the smartest people in the world.

For normal people like us, debugging why agents make wrong decisions means replaying our games again and again, which is very time-consuming and boring. It could take several hours, or even days, to figure out why. I experienced this many times in the past; it’s tedious, and I don’t want to experience it again.

How Utility AI addresses this

As I mentioned earlier, Utility AI allows us to separate decision-making from decision execution and turn them into two distinct processes. Therefore, we can run the decision-making process on its own at editor time and preview which decision is chosen right in the Editor, without having to play the game.

So if the agent makes wrong decisions, you just need to log all the parameters involved in the decision-making process into the Console Window, then enter the values of those parameters into the Intelligence Editor to figure out the cause of the issue.

If decision-making and decision execution were part of the same process, this wouldn’t be possible because decision execution depends on the real-time state of the game world. For example, we cannot move the agent to its enemies at editor time, as the enemies are instantiated at runtime and do not exist at editor time.

Although Utility AI allows us to preview which decision is chosen at editor time, not all Utility AI frameworks support this feature. Fortunately, Utility Intelligence supports this feature, so you can preview which decision is chosen by modifying input values in the Intelligence Editor. I believe this feature will save you a lot of time. You can learn more about it here: Status Preview.

Disadvantages of Utility AI

Although Utility AI avoids many of the drawbacks of condition-based AI techniques, it has its own disadvantages, such as:

- Scoring decisions accurately can be difficult.

- Decision-target pairs with similar scores may oscillate back and forth as their scores fluctuate.

- The decision-making process in Utility AI may be more expensive than other AI techniques since it needs to evaluate every decision-target pair.

However, if you use Utility Intelligence, we provide tools to address these issues. I can’t claim they solve all of them completely, but they can help to some extent:

- How to use the Status Preview feature to preview the decision scores, and which decision is chosen at editor time.

- How to reduce the oscillation between decision-target pairs

- How to optimize the decision-making process and the score-calculation process

Conclusion

You should use Utility AI instead of traditional condition-based AI techniques like BTs, FSMs and GOAP if:

- You don’t want to deal with the drawbacks associated with conditions and transitions.

- You need an AI technique that stays manageable and scalable as complexity grows.

- You want your agents to behave more naturally.

- You want to separate decision-making from decision execution, which allows you to:

    - Distribute the decision-making workload across multiple frames or threads.

    - Manually trigger the decision-making process when necessary.

You should use traditional condition-based AI techniques if:

- You don’t want to deal with the disadvantages of Utility AI.

- You don’t need the benefits of separating decision-making from decision execution.

- You need something easier to get started with, as Utility AI may be harder to learn than other AI techniques.

- Your AI behaviors are not very complex, since the cost of making changes in traditional AI techniques will increase as complexity grows.

- It is acceptable for your agents to behave unnaturally in some cases.


Created: September 9, 2024